The Canadian Planetary Emulation Terrain Energy-Aware Rover Navigation Dataset

Clearpath Husky rover operating at the Canadian Space Agency’s Mars Emulation Terrain (MET) during a data collection run.

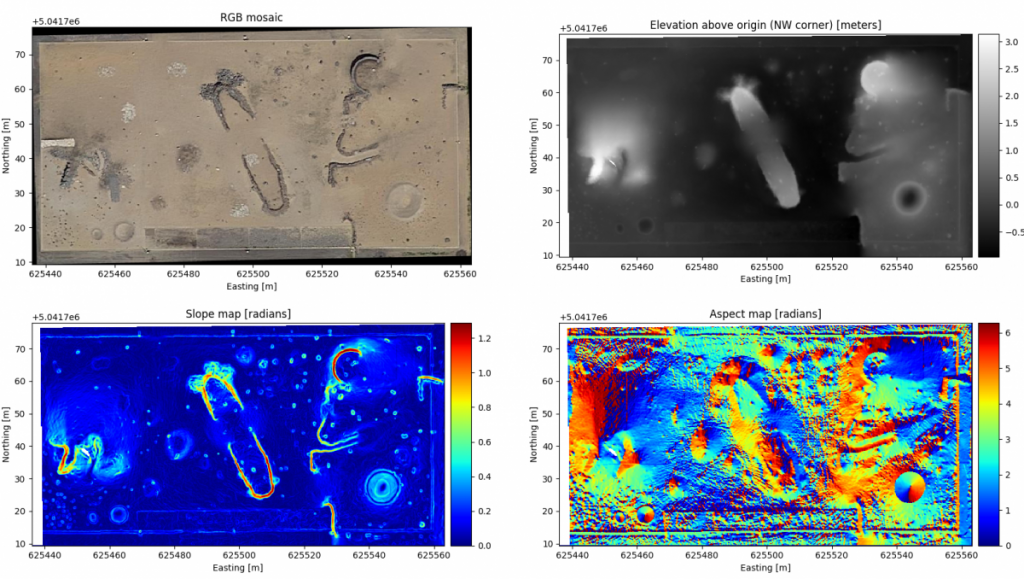

We have collected a unique energy-aware navigation dataset at the Canadian Space Agency’s Mars Emulation Terrain (MET) in Saint-Hubert, Quebec, Canada. It consists of raw and post-processed sensor measurements collected by our rover, together with georeferenced aerial maps of the MET (colour mosaic, elevation model, slope and aspect maps). The data are available for download below in both human-readable and rosbag (.bag) formats.

The dataset is described in this journal article. Python data fetching and plotting scripts and ROS-based visualization tools are available in the dataset’s repository.

Hardware Overview

The rover used for data collection was a Clearpath Husky UGV, a four-wheeled, skid-steered mobile robot with its own battery and two motors (one driving the wheels on the left side of the vehicle and one driving the wheels on the right side). The main computer supporting control and data logging was powered by a separate 12 V deep-cycle rechargeable battery. Battery operation was preferred over a gasoline generator so that the rover’s weight remained constant over time, avoiding variations in its interaction with the terrain surface.
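Because the Husky is skid-steered with one motor per side, its planar motion is often approximated with differential-drive kinematics computed from the left and right wheel speeds. The sketch below assumes that approximation; the wheel radius and effective track width are hypothetical placeholder values, not calibrated Husky parameters. For a skid-steered vehicle the effective track width is typically larger than the geometric one because of wheel slip, so it would normally be identified from data.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SkidSteerParams:
    # Hypothetical values for illustration only; not calibrated Husky parameters.
    wheel_radius_m: float = 0.165
    effective_track_m: float = 0.55


def planar_velocity(omega_left: float, omega_right: float,
                    params: SkidSteerParams = SkidSteerParams()) -> tuple[float, float]:
    """Return (forward speed [m/s], yaw rate [rad/s]) from wheel angular rates [rad/s].

    Differential-drive approximation of skid-steer kinematics:
    v = r (w_r + w_l) / 2,   yaw_rate = r (w_r - w_l) / B_eff
    """
    v_left = params.wheel_radius_m * omega_left
    v_right = params.wheel_radius_m * omega_right
    forward = 0.5 * (v_right + v_left)
    yaw_rate = (v_right - v_left) / params.effective_track_m
    return forward, yaw_rate


print(planar_velocity(2.0, 2.0))   # equal wheel rates: straight-line motion
print(planar_velocity(-1.0, 1.0))  # opposite wheel rates: turning in place
```

Equal wheel rates give pure forward motion, while opposite rates give a turn in place with zero forward speed; a positive yaw rate corresponds to a left (counterclockwise) turn.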

The rover carried a suite of sensors mounted on an aluminum mast near the front of the vehicle. Sensors on board included an Occam Vision Group omnidirectional stereo camera (composed of 10 individual RGB cameras, each with a resolution of 752 x 480 pixels), a monochrome Point Grey Flea3 camera with a resolution of 1280 x 1024 pixels, and an Apogee E-804-SP-420 pyranometer. The Flea3 camera was tilted slightly downwards to enable imaging of the terrain directly in front of the rover. The pyranometer was installed on top of the omnidirectional camera, making it possible to measure solar irradiance at all tilt angles (and to further estimate solar power generation). A LORD MicroStrain 3DM-GX3-25 inertial measurement unit (IMU) was installed near the base of the sensor mast. Positioning information was provided by a NovAtel Smart6-L GPS receiver at the rear of the Husky platform.
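Since the pyranometer reports irradiance (W/m²), turning it into an estimate of solar power generation requires a panel model. The sketch below assumes a hypothetical panel lying parallel to the pyranometer’s sensing surface, with a hypothetical area and conversion efficiency (neither is part of the dataset), computes power as efficiency × area × irradiance at each sample, and integrates it over time with the trapezoidal rule to obtain energy.

```python
def solar_energy_j(times_s, irradiance_w_m2, panel_area_m2=1.0, efficiency=0.2):
    """Estimate generated energy [J] from pyranometer irradiance samples.

    Assumes a hypothetical panel parallel to the pyranometer's sensing surface,
    so that power = efficiency * area * irradiance at every sample.
    """
    if len(times_s) != len(irradiance_w_m2):
        raise ValueError("times and irradiance must have the same length")
    power_w = [efficiency * panel_area_m2 * g for g in irradiance_w_m2]
    energy = 0.0
    # Trapezoidal integration; works with non-uniform sample spacing.
    for i in range(1, len(times_s)):
        dt = times_s[i] - times_s[i - 1]
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy


# Constant 500 W/m^2 for 10 s on a 1 m^2, 20 % efficient panel.
print(solar_energy_j([0.0, 5.0, 10.0], [500.0, 500.0, 500.0]))
```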

Data Overview

All data products are now available on this IEEE DataPort page! To access them, you need to create a (free) IEEE DataPort account and be logged in. The information on the current page remains the main reference for this dataset.

The dataset is divided into six runs, each covering different sections of the MET at different times. All data were collected on September 4, 2018, between 17:00 and 19:00 Eastern Daylight Time (EDT). Every run is provided in both human-readable and rosbag (.bag) formats.
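The run start times listed below are given in Eastern Daylight Time, a fixed UTC-4 offset on the collection date. When aligning them with sensor timestamps, which in rosbags are typically UTC-based POSIX times, a conversion such as the following can help. This is a sketch; the helper name `run_window_utc` is hypothetical.

```python
from datetime import datetime, timedelta, timezone

# EDT is a fixed UTC-4 offset; September 4, 2018 falls within daylight saving time.
EDT = timezone(timedelta(hours=-4), "EDT")


def run_window_utc(start_hms: str, duration_s: int, date: str = "2018-09-04"):
    """Return the (start, end) of a run as timezone-aware UTC datetimes."""
    local_start = datetime.strptime(f"{date} {start_hms}", "%Y-%m-%d %H:%M:%S")
    start_utc = local_start.replace(tzinfo=EDT).astimezone(timezone.utc)
    return start_utc, start_utc + timedelta(seconds=duration_s)


start, end = run_window_utc("17:19:41", 526)  # Run 1
print(start.isoformat(), end.isoformat())
# 2018-09-04T21:19:41+00:00 2018-09-04T21:28:27+00:00
```

Calling `.timestamp()` on either datetime gives the corresponding POSIX time in seconds, which can then be compared directly with message timestamps.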

To avoid extremely large files, the rosbag data of every run were split into two parts: “runX_clouds_only.bag” and “runX_base.bag”. The former contains only the point clouds generated, after data collection, from the raw omnidirectional camera images; the latter contains all the raw data and the remaining post-processed data. The two rosbags share consistent timestamps and can be merged, for example, using bagedit. The human-readable data were split in the same way.
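Because both parts of a run share consistent timestamps, their records can be interleaved into a single time-ordered stream. The sketch below illustrates the idea on in-memory `(timestamp, payload)` records using the standard library’s `heapq.merge`; the record layout is a hypothetical stand-in, not the actual file format of the human-readable data, and merging the rosbags themselves would go through a dedicated tool such as bagedit.

```python
import heapq
from typing import Iterable, Iterator, Tuple

Record = Tuple[float, str]  # hypothetical (timestamp [s], payload) layout


def merge_by_time(*streams: Iterable[Record]) -> Iterator[Record]:
    """Lazily merge streams that are each already sorted by timestamp."""
    # heapq.merge holds only one pending record per input stream at a time,
    # so large files can be merged without loading them fully into memory.
    return heapq.merge(*streams, key=lambda rec: rec[0])


base = [(0.00, "imu"), (0.10, "gps"), (0.20, "imu")]
clouds = [(0.05, "cloud"), (0.15, "cloud")]
for stamp, payload in merge_by_time(base, clouds):
    print(f"{stamp:.2f} {payload}")
```

The approach only works if each input is already sorted by timestamp; unsorted inputs would need to be sorted first.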

Aside from point clouds, the post-processed data of every run include a blended cylindrical panorama made from the omnidirectional sensor images, planar rover velocity estimates from wheel encoder data, and an estimated global trajectory obtained by fusing GPS data with stereo imagery from cameras 0 and 1 of the omnidirectional sensor using VINS-Fusion, later combined with the raw IMU data. Global sun vectors and sun vectors relative to the rover’s base frame were also calculated using the Pysolar library, which additionally provided a clear-sky direct irradiance estimate for every pyranometer measurement.
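Global and rover-relative sun vectors are related through the rover’s attitude. The sketch below assumes a solar azimuth given as a compass bearing (clockwise from north) and an altitude above the horizon, as provided by solar position libraries such as Pysolar (check the convention of the version in use). It builds a unit vector in an east-north-up (ENU) world frame, rotates it into a base frame (x forward, y left, z up) from yaw-pitch-roll angles, and scales a clear-sky direct normal irradiance by the cosine of the incidence angle on a surface normal to the base z axis. All frame and angle conventions here are assumptions and may differ from those used in the dataset.

```python
from __future__ import annotations

import math


def sun_vector_enu(azimuth_deg: float, altitude_deg: float) -> list[float]:
    """Unit sun vector in ENU, with azimuth measured clockwise from north."""
    az, alt = math.radians(azimuth_deg), math.radians(altitude_deg)
    return [math.cos(alt) * math.sin(az),  # east
            math.cos(alt) * math.cos(az),  # north
            math.sin(alt)]                 # up


def rotation_zyx(yaw: float, pitch: float, roll: float) -> list[list[float]]:
    """Body-to-world rotation R = Rz(yaw) Ry(pitch) Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def world_to_body(vec_world, yaw: float, pitch: float, roll: float) -> list[float]:
    """Express a world-frame vector in the body frame: v_body = R^T v_world."""
    r = rotation_zyx(yaw, pitch, roll)
    return [sum(r[i][j] * vec_world[i] for i in range(3)) for j in range(3)]


def direct_on_deck(dni_w_m2: float, sun_body) -> float:
    """Direct irradiance on a surface normal to body z; zero if the sun is behind it."""
    return dni_w_m2 * max(0.0, sun_body[2])


sun_world = sun_vector_enu(azimuth_deg=90.0, altitude_deg=30.0)  # sun to the east
sun_body = world_to_body(sun_world, yaw=math.radians(90.0), pitch=0.0, roll=0.0)
print(sun_body, direct_on_deck(800.0, sun_body))
```

For example, with the rover facing north (yaw of 90 degrees in ENU) the eastern sun appears on its right (negative body y), and a hypothetical 800 W/m² direct normal irradiance with the sun 30 degrees above the horizon gives about 400 W/m² on a level deck.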

Aerial maps (UTM coordinates, zone 18T)

Aerial maps available on our IEEE DataPort page: raster_data.tar.gz (2.6 MB)
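Because the aerial maps are georeferenced in UTM zone 18T, each raster cell can be mapped to easting/northing coordinates and back. The sketch below assumes a north-up raster described by a GDAL-style six-element geotransform (origin at the top-left corner of the top-left cell); the geotransform values in the example are hypothetical placeholders, not the actual georeferencing of the MET maps, which should be read from the raster files themselves.

```python
from typing import Sequence, Tuple


def pixel_to_utm(col: float, row: float, gt: Sequence[float],
                 center: bool = True) -> Tuple[float, float]:
    """Map raster (col, row) indices to UTM (easting, northing).

    gt = (origin_x, pixel_width, row_rotation, origin_y, col_rotation, pixel_height),
    with pixel_height negative for north-up rasters.
    """
    if center:
        col, row = col + 0.5, row + 0.5  # refer to the cell centre, not its corner
    easting = gt[0] + col * gt[1] + row * gt[2]
    northing = gt[3] + col * gt[4] + row * gt[5]
    return easting, northing


def utm_to_pixel(easting: float, northing: float, gt: Sequence[float]) -> Tuple[int, int]:
    """Return the (col, row) of the cell containing a UTM point (north-up rasters only)."""
    if gt[2] != 0 or gt[4] != 0:
        raise ValueError("rotated geotransforms are not handled in this sketch")
    col = int((easting - gt[0]) // gt[1])
    row = int((northing - gt[3]) // gt[5])
    return col, row


# Hypothetical geotransform: 0.2 m cells and a placeholder origin (not the MET's real values).
GT = (620000.0, 0.2, 0.0, 5040000.0, 0.0, -0.2)
e, n = pixel_to_utm(10, 20, GT)
print(e, n, utm_to_pixel(e, n, GT))
```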

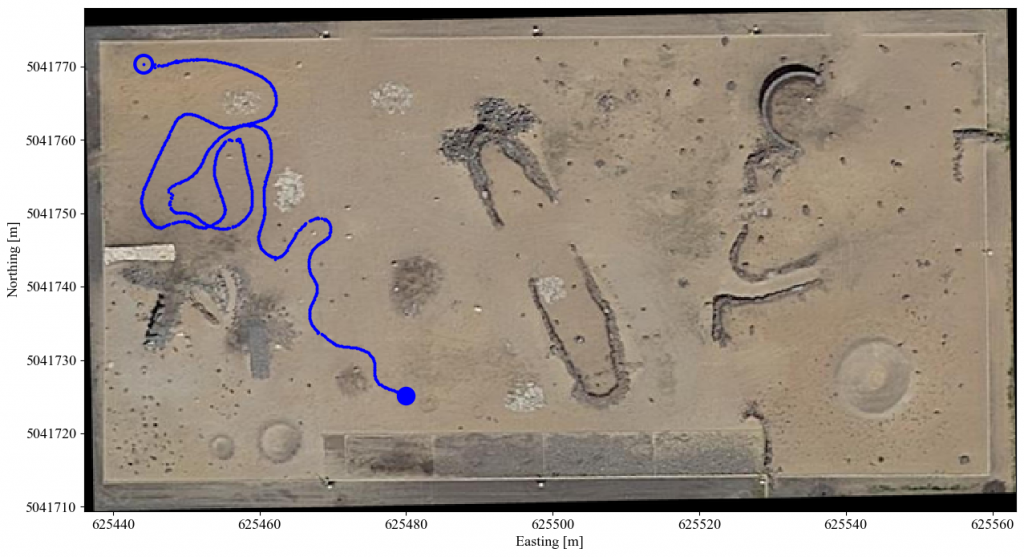

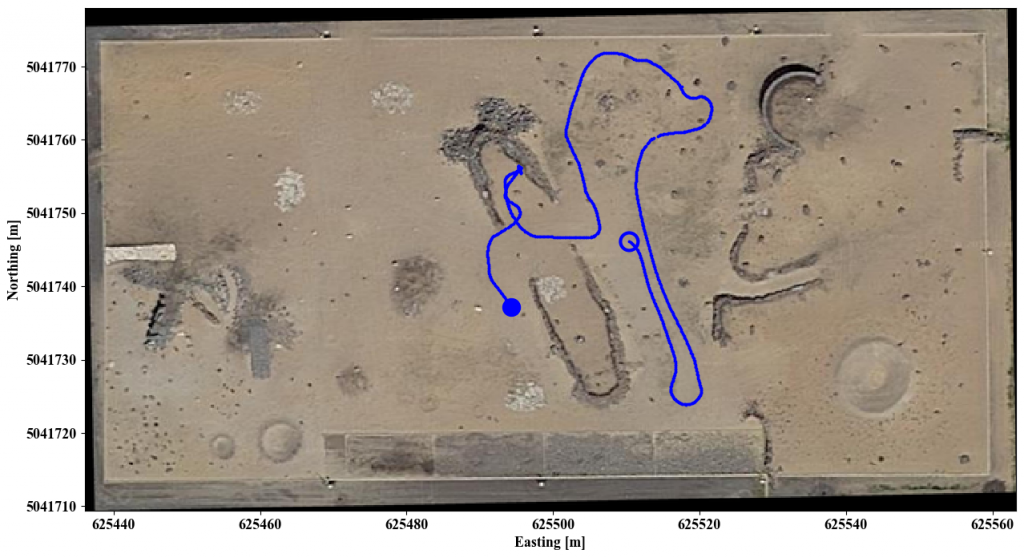
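The slope and aspect maps are quantities derived from the elevation model. As an illustration of how such maps are commonly computed, the sketch below applies central differences to a north-up elevation grid with square cells. It is not necessarily the procedure used to produce the distributed maps (Horn’s 3x3 operator, for instance, is also common), and the aspect convention (compass bearing of the downslope direction) is an assumption.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def slope_aspect(dem: Grid, cell_size_m: float) -> Tuple[Grid, Grid]:
    """Return (slope_deg, aspect_deg) grids for interior cells; border cells are NaN.

    Assumes the row index increases southward and the column index increases eastward.
    Aspect is the compass bearing (0 = north, 90 = east) of the downslope direction;
    it is arbitrary on perfectly flat cells.
    """
    rows, cols = len(dem), len(dem[0])
    nan = float("nan")
    slope = [[nan] * cols for _ in range(rows)]
    aspect = [[nan] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            dz_east = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell_size_m)
            dz_north = (dem[r - 1][c] - dem[r + 1][c]) / (2 * cell_size_m)
            slope[r][c] = math.degrees(math.atan(math.hypot(dz_east, dz_north)))
            # Downslope points along the negative gradient; atan2(east, north) is a bearing.
            aspect[r][c] = math.degrees(math.atan2(-dz_east, -dz_north)) % 360.0
    return slope, aspect


# Terrain rising 0.1 m per 1 m cell towards the east: gentle slope facing west.
dem = [[0.1 * c for c in range(3)] for _ in range(3)]
s, a = slope_aspect(dem, cell_size_m=1.0)
print(round(s[1][1], 2), a[1][1])
```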

Run 1

Length: 189 m

Start local time (EDT): 17:19:41

Duration: 526 s (8 minutes 46 seconds)

Trajectory employed in run 1. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 1 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run1_base_hr.tar.gz (16.8 GB)

– Human-readable (clouds only) data: run1_clouds_only_hr.tar.gz (54.6 GB)

– Rosbag (base) data: run1_base.tar.gz (24.7 GB)

– Rosbag (clouds only) data: run1_clouds_only.tar.gz (28.7 GB)

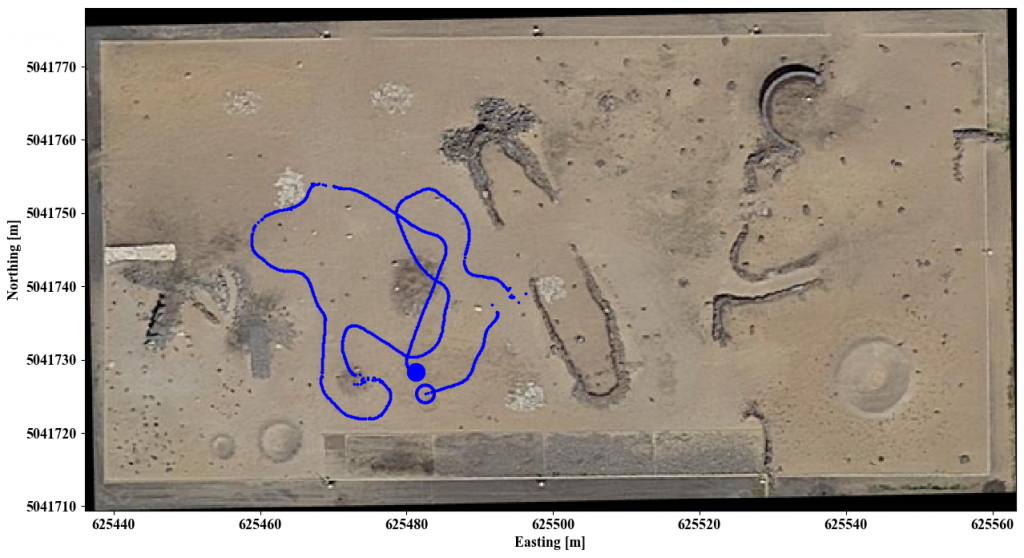

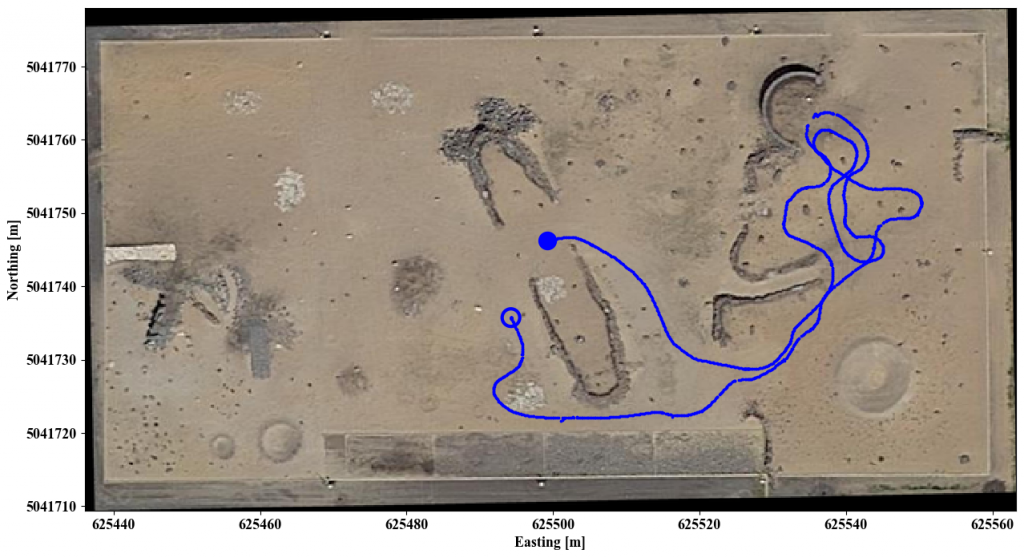

Run 2

Length: 191 m

Start local time (EDT): 17:31:45

Duration: 490 s (8 minutes 10 seconds)

Trajectory employed in run 2. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 2 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run2_base_hr.tar.gz (15.2 GB)

– Human-readable (clouds only) data: run2_clouds_only_hr.tar.gz (52.3 GB)

– Rosbag (base) data: run2_base.tar.gz (22.3 GB)

– Rosbag (clouds only) data: run2_clouds_only.tar.gz (24.7 GB)

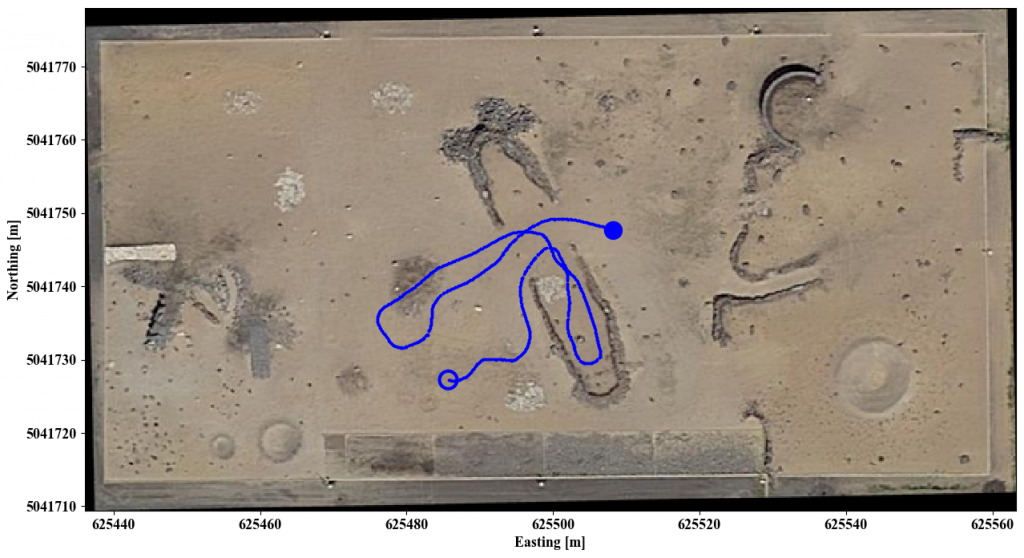

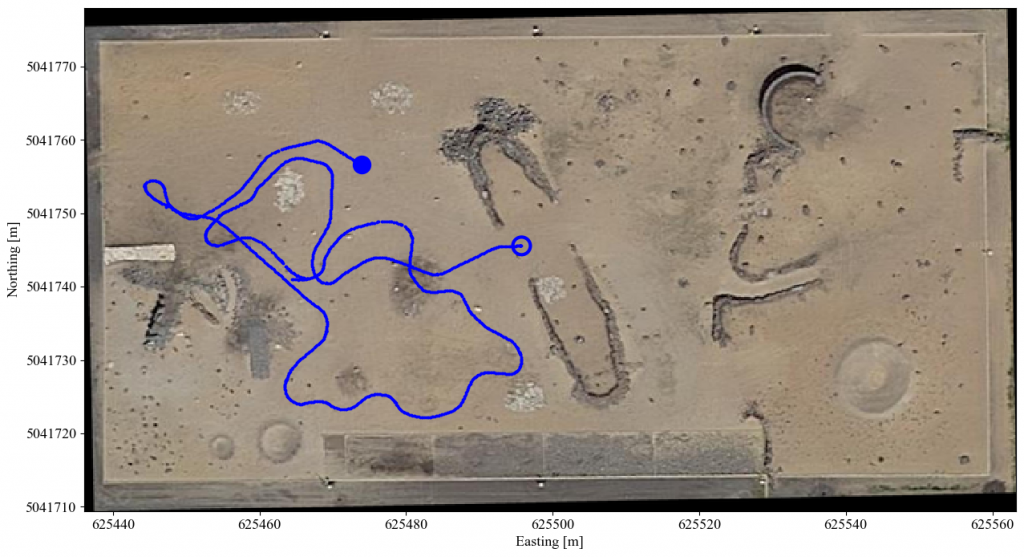

Run 3

Length: 146 m

Start local time (EDT): 17:43:25

Duration: 367 s (6 minutes 7 seconds)

Trajectory employed in run 3. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 3 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run3_base_hr.tar.gz (11.2 GB)

– Human-readable (clouds only) data: run3_clouds_only_hr.tar.gz (38.2 GB)

– Rosbag (base) data: run3_base.tar.gz (17.4 GB)

– Rosbag (clouds only) data: run3_clouds_only.tar.gz (20.3 GB)

Run 4

Length: 167 m

Start local time (EDT): 18:14:32

Duration: 457 s (7 minutes 37 seconds)

Trajectory employed in run 4. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 4 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run4_base_hr.tar.gz (12.4 GB)

– Human-readable (clouds only) data: run4_clouds_only_hr.tar.gz (23.6 GB)

– Rosbag (base) data: run4_base.tar.gz (19.5 GB)

– Rosbag (clouds only) data: run4_clouds_only.tar.gz (26.5 GB)

Run 5

Length: 260 m

Start local time (EDT): 18:23:08

Duration: 693 s (11 minutes 33 seconds)

Trajectory employed in run 5. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 5 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run5_base_hr.tar.gz (18.5 GB)

– Human-readable (clouds only) data: run5_clouds_only_hr.tar.gz (75.6 GB)

– Rosbag (base) data: run5_base.tar.gz (28.9 GB)

– Rosbag (clouds only) data: run5_clouds_only.tar.gz (39.1 GB)

Run 6

Length: 262 m

Start local time (EDT): 18:34:59

Duration: 708 s (11 minutes 48 seconds)

Trajectory employed in run 6. The start and end locations are marked by a hollow circle and full circle, respectively.

Run 6 data products are available on our IEEE DataPort page:

– Human-readable (base) data: run6_base_hr.tar.gz (20.6 GB)

– Human-readable (clouds only) data: run6_clouds_only_hr.tar.gz (75.1 GB)

– Rosbag (base) data: run6_base.tar.gz (29.6 GB)

– Rosbag (clouds only) data: run6_clouds_only.tar.gz (38.8 GB)
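The per-run lengths and durations listed above can be combined into simple summary statistics. The snippet below only performs arithmetic on the figures published on this page to recompute average traverse speeds and totals.

```python
# (length [m], duration [s]) for each run, copied from the listings above.
RUNS = {
    1: (189, 526),
    2: (191, 490),
    3: (146, 367),
    4: (167, 457),
    5: (260, 693),
    6: (262, 708),
}

for run, (length_m, duration_s) in RUNS.items():
    minutes, seconds = divmod(duration_s, 60)
    print(f"Run {run}: {length_m / duration_s:.2f} m/s over {minutes} min {seconds} s")

total_length = sum(length for length, _ in RUNS.values())
total_duration = sum(duration for _, duration in RUNS.values())
print(f"Total: {total_length} m in {total_duration} s "
      f"({total_length / total_duration:.2f} m/s on average)")
```

All six runs average roughly 0.36 to 0.40 m/s, for a total of 1215 m driven over 3241 s (about 54 minutes).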

Miscellaneous

Available on our IEEE DataPort page:

– Rover frame transforms: rover_transforms.txt (1.9 KB)

– Camera intrinsics: ameras_intrinsics.txt (1.4 KB)
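The rover frame transforms and camera intrinsics can be combined to project a 3D point expressed in the rover’s base frame into a camera image. The sketch below assumes an ideal pinhole model without lens distortion, a 4x4 homogeneous base-to-camera transform, and the usual optical-frame convention (x right, y down, z along the optical axis). The numeric transform and intrinsics are hypothetical placeholders (only the 752 x 480 image size comes from the omnidirectional camera description); the actual values and their layout must be taken from rover_transforms.txt and the intrinsics file.

```python
from typing import Optional, Sequence, Tuple

Matrix4 = Sequence[Sequence[float]]


def transform_point(t_cam_base: Matrix4, p_base: Sequence[float]) -> Tuple[float, ...]:
    """Apply a 4x4 homogeneous transform (base frame -> camera frame) to a 3D point."""
    x, y, z = p_base
    return tuple(row[0] * x + row[1] * y + row[2] * z + row[3] for row in t_cam_base[:3])


def project_pinhole(p_cam, fx, fy, cx, cy, width, height) -> Optional[Tuple[float, float]]:
    """Project a camera-frame point to pixel (u, v); None if behind the camera or off-image."""
    x, y, z = p_cam
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    if not (0 <= u < width and 0 <= v < height):
        return None
    return u, v


# Hypothetical transform: camera looking along the base x axis, 1 m above the base origin.
T_CAM_BASE = [
    [0.0, -1.0, 0.0, 0.0],   # camera x = -base y (right)
    [0.0, 0.0, -1.0, 1.0],   # camera y = -base z + 1 m (down)
    [1.0, 0.0, 0.0, 0.0],    # camera z = base x (forward)
    [0.0, 0.0, 0.0, 1.0],
]
INTRINSICS = dict(fx=400.0, fy=400.0, cx=376.0, cy=240.0, width=752, height=480)  # hypothetical

ground_point = transform_point(T_CAM_BASE, (5.0, 0.0, 0.0))  # 5 m ahead, on the ground
print(project_pinhole(ground_point, **INTRINSICS))
```

A point on the ground ahead of the rover lands below the image centre, as expected for a camera mounted above the base frame origin.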

Citation

@article{lamarre2020canadian,
  author = {Lamarre, Olivier and Limoyo, Oliver and Mari{\'c}, Filip and Kelly, Jonathan},
  title = {{The Canadian Planetary Emulation Terrain Energy-Aware Rover Navigation Dataset}},
  journal = {The International Journal of Robotics Research},
  year = {2020},
  doi = {10.1177/0278364920908922},
  url = {https://doi.org/10.1177/0278364920908922},
  publisher = {SAGE Publications Sage UK: London, England}
}

Questions?

Please take a look at our list of clarifications and corrections on our IEEE DataPort page: clarifications_and_corrections.txt (0.9 KB).

If you encounter any problems using the scripts in our GitHub repository, please open an issue. Other questions, inquiries, or feedback can be sent to Olivier Lamarre at olivier.lamarre@robotics.utias.utoronto.ca.

About the Authors

Olivier Lamarre is a Ph.D. student with the STARS Laboratory; he is working on energy-aware planning for rovers using surface and orbital data in cooperation with NASA JPL.

Oliver Limoyo is also a Ph.D. student with the STARS Laboratory and is working on enabling mobile manipulators to learn how to interact with unstructured and dynamic environments.

Filip Marić was a Ph.D. student with the LAMoR group at the University of Zagreb and the STARS Laboratory.

Dr. Jonathan Kelly is the Director of the STARS Laboratory and has research interests in 3D computer vision, probabilistic modelling, estimation theory, and machine learning.