Mission Statement

Prof. Kelly with our mobile manipulator platform on the day of its arrival at the laboratory.

We envision a future in which robotic systems are much more pervasive, persistent, and perceptive than at present:

- pervasive: widely deployed in assistive roles across the spectrum of human activity (robots everywhere!)

- persistent: able to operate reliably and independently for long durations, on the order of days, weeks, or more, and

- perceptive: aware of their environment, and capable of acting intelligently when confronted with new situations and experiences.

Towards this end, the STARS Laboratory carries out research at the nexus of sensing, planning, and control, with a focus on the study of fundamental problems related to perception, representation, and understanding of the world. Our goal is to enable robots to carry out their tasks safely in challenging, dynamic environments, for example, in homes and offices, on road networks, underground, underwater, in space, and on remote planetary surfaces. We work to develop power-on-and-go machines that are able to function from day one without a human in the loop.

To make long-term autonomy possible, we design probabilistic algorithms that are able to deal with uncertainty about both the environment and the robot’s own internal state over time. We use tools from estimation theory, learning, and optimization to enable perception for efficient planning and control. Our research relies on the integration of multiple sensors and sensing modalities; we believe that rich sensing is a necessary component for the construction of truly robust and reliable autonomous systems. An important aspect of our research is the extensive experimental validation of our theoretical results, to ensure that our work is useful in the real world. We are committed to robotics as a science, and emphasize open source contributions and reproducible experimentation.

Current Research Directions and Projects

The Laboratory is actively involved in a variety of research projects and collaborative undertakings with our industrial partners. New projects are always in development. Details on several active projects are provided below. If a project interests you, please consider joining us!


Our Clearpath Ridgeback mobile base with the UR10 arm and Robotiq 3-fingered hand.

Collaborative Mobile Manipulation in Dynamic Environments

Cobots, or collaborative robots, are a class of robots intended to physically interact with humans in a shared workspace. We have recently begun exploring research problems related to various tasks for cobots, including collaborative manipulation, transport, and assembly. We are working to develop high-performance, tightly coupled perception-action loops for these tasks, making use of rich multimodal sensing. Experiments are carried out on an advanced mobile manipulation platform based on a Clearpath Ridgeback omnidirectional mobile base and a Universal Robots UR10 arm. Our manipulator is a state-of-the-art platform that is currently unique in Canada. Much of our testing takes place in our on-site Vicon motion capture facility, which allows for ground-truth evaluation of our algorithms. We have also invested substantial effort in developing methods to automatically self-calibrate lower-cost platforms, with an eye towards long-term deployment in a wide range of environments.

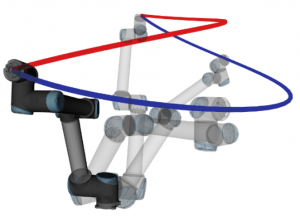

We generate smooth trajectories that have high manipulability throughout the entire path.

Planning Highly Dexterous Manipulation Trajectories

Many autonomous manipulation problems, such as stacking boxes or bin picking, require the robot to periodically reassess its trajectory and alter its planned movement in response to unforeseen changes in the environment. As a result, a manipulator can easily find itself in a joint configuration that is at or near a singularity, which results in a complete loss of mobility in certain directions of the task space. A significant challenge lies in generating motion plans that avoid placing the robot in or near such configurations, since doing so can make it impossible for the robot to adapt its movement later on. Our research focuses on ways to augment existing motion planning methods so that they generate singularity-robust trajectories, and on novel optimization criteria that help to improve existing motion plans. Our goal is to make autonomous manipulation safer and more predictable in scenarios such as human-robot collaboration.
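One widely used way to quantify how close an arm is to a singularity is Yoshikawa's manipulability index, w = sqrt(det(J J^T)), which drops to zero at a singular configuration. The sketch below evaluates it for a planar two-link arm, whose Jacobian is small enough to write by hand. The arm model, link lengths, and function names are illustrative assumptions; this is not the lab's planner or optimization criterion.

```python
import math


def planar_2link_jacobian(q1, q2, l1=1.0, l2=1.0):
    """Jacobian of the end-effector (x, y) position of a planar two-link arm."""
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-l1 * s1 - l2 * s12, -l2 * s12],
            [l1 * c1 + l2 * c12, l2 * c12]]


def manipulability(J):
    """Yoshikawa's index w = sqrt(det(J J^T)) for a 2x2 Jacobian."""
    a = J[0][0] ** 2 + J[0][1] ** 2            # (J J^T)[0][0]
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]  # (J J^T)[0][1]
    d = J[1][0] ** 2 + J[1][1] ** 2            # (J J^T)[1][1]
    return math.sqrt(max(a * d - b * b, 0.0))  # clamp tiny negative round-off


def worst_case_manipulability(path, l1=1.0, l2=1.0):
    """Smallest manipulability along a sequence of (q1, q2) joint configurations."""
    return min(manipulability(planar_2link_jacobian(q1, q2, l1, l2))
               for q1, q2 in path)
```

For this arm det J = l1 l2 sin(q2), so w vanishes when the elbow is fully straight (q2 = 0). A planner could, for example, penalize or reject candidate paths whose worst-case value falls below a chosen threshold.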

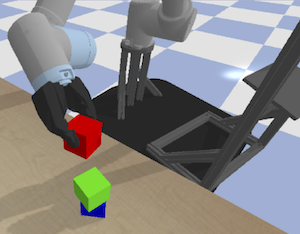

Our work leverages simulation tools and real-world robots for training and testing.

Learning Robust Deep Models for Manipulation

Despite many impressive recent advances, intelligent robotic manipulation remains very challenging. Many manipulation systems are built from numerous brittle, independent components and can easily fail. We are investigating data-driven approaches to robotic manipulation; specifically, we are considering model-based, multimodal (multisensor) methods for representation learning, and model-free methods based on human intervention for task learning. Our goal is to enable mobile manipulators to learn to complete difficult tasks that require varied environment interactions, including reaching, pushing, sliding, inserting, grasping, and placing, without encoding physical constraints by hand. Our current work explores ways to make learning methods more robust to challenging conditions that may be encountered during deployment (e.g., obstructions, changes in camera viewpoint, and noise). In addition to our laboratory mobile manipulation platform, we make extensive use of simulation tools for training, testing, and transfer learning.

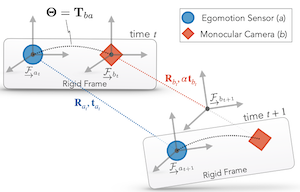

We are developing certifiable perception algorithms that guarantee optimality when a solution is found.

Certifiably Optimal Perception

Robots that are to safely collaborate with humans in complex, dynamic environments will require a breakthrough in robust and reliable perception. To this end, a great deal of recent work on SLAM and other key robotic estimation problems has focused on efficient methods that leverage the theory of convex relaxations to produce certifiably optimal solutions. Certifiable algorithms allow a robot to determine when its estimates are globally optimal with respect to the measurement data, which enables verifiable perception systems that can autonomously detect and rectify uncertain or incorrect estimates. We have developed certifiable algorithms for the important class of hand-eye (or sensor egomotion) calibration problems. Our current work in this area explores ways to combine certifiable algorithms with learned models; learned models can exhibit state-of-the-art performance on perception tasks but lack the interpretability and performance guarantees of classical state estimators.
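To make "certifiably optimal" concrete, consider a toy problem: minimize x^T Q x over unit vectors x in the plane (a unit vector (cos θ, sin θ) is one way to parameterize a planar rotation). Given a candidate solution from any local solver, the Lagrange multiplier λ = x^T Q x can be recovered, and if the certificate matrix S = Q - λI is positive semidefinite, the candidate is provably the global minimum. This sketch shows the dual-certificate idea only; it is not the lab's hand-eye calibration algorithm, and practical certifiable solvers obtain certificates for much larger problems via semidefinite relaxations.

```python
import math


def quadratic_cost(Q, x):
    """x^T Q x for a symmetric 2x2 matrix Q."""
    return Q[0][0] * x[0] ** 2 + 2 * Q[0][1] * x[0] * x[1] + Q[1][1] * x[1] ** 2


def is_psd_2x2(S, tol):
    """A symmetric 2x2 matrix is PSD iff both diagonal entries and the determinant are >= 0."""
    return (S[0][0] >= -tol and S[1][1] >= -tol
            and S[0][0] * S[1][1] - S[0][1] * S[1][0] >= -tol)


def certify_global_optimum(Q, x, tol=1e-9):
    """Check whether unit vector x globally minimizes x^T Q x subject to x^T x = 1.

    The multiplier is recovered from the candidate as lam = x^T Q x. If
    S = Q - lam * I is positive semidefinite, then for every unit vector y,
    y^T Q y = lam + y^T S y >= lam, so no other feasible point does better.
    """
    n = math.hypot(x[0], x[1])
    x = (x[0] / n, x[1] / n)  # project the candidate onto the unit circle
    lam = quadratic_cost(Q, x)
    S = [[Q[0][0] - lam, Q[0][1]], [Q[1][0], Q[1][1] - lam]]
    return is_psd_2x2(S, tol)
```

A failed check does not by itself prove the candidate is suboptimal in general relaxations (the relaxation may simply not be tight); in this small eigenvalue-type problem, however, the certificate succeeds exactly at the global minimizers.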

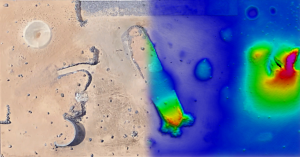

Orbital imagery and elevation model of the Canadian Space Agency’s Analogue Terrain. We have made an extensive rover navigation dataset publicly available.

Energy-Aware Planning for Planetary Navigation

Over the next decade and beyond, solar-powered rovers sent to Mars will be required to drive long distances in short amounts of time. Since energy availability has always been an important constraint in planetary exploration, proper energy management and clever navigation planning will be essential to the success of these missions. At present, high-resolution orbital imagery and topographic data of the Martian surface are interpreted primarily by human operators as part of long-term activity planning. We are investigating how such high-resolution data can be used to automatically plan long-distance traverses that minimize navigation energy consumption. This research aims to enable global, energy-efficient path planning that can better inform tactical, precise navigation on the planetary surface. We have also released an extensive dataset for related studies.
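A minimal sketch of energy-aware global planning is Dijkstra's algorithm over a gridded elevation model, with each move charged an energy cost instead of a distance. The energy model here (rolling resistance plus work against gravity, no regenerative braking), the rover mass, and the rolling-resistance coefficient mu are hypothetical; only g = 3.71 m/s² (Mars surface gravity) is a physical constant. A real planner would also need to account for factors such as slope limits and solar power availability.

```python
import heapq
import math


def edge_energy(dz, step, mass, g, mu):
    """Hypothetical energy (J) to move `step` metres horizontally while climbing dz metres.

    Descending can offset rolling losses, but with no regeneration the cost is never negative.
    """
    return max(0.0, mass * g * (mu * step + dz))


def plan_min_energy(elev, start, goal, cell=1.0, mass=10.0, g=3.71, mu=0.1):
    """Dijkstra search over a 4-connected elevation grid; returns (energy, path)."""
    rows, cols = len(elev), len(elev[0])
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, (r, c) = heapq.heappop(frontier)
        if (r, c) == goal:
            break
        if cost > best[(r, c)]:
            continue  # stale queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                dz = elev[nr][nc] - elev[r][c]
                new_cost = cost + edge_energy(dz, cell, mass, g, mu)
                if new_cost < best.get((nr, nc), math.inf):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = (r, c)
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best:
        raise ValueError("goal unreachable")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return best[goal], path[::-1]
```

On a grid with a single tall cell between start and goal, the planner detours around it, because four flat moves cost far less energy than climbing the obstacle.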

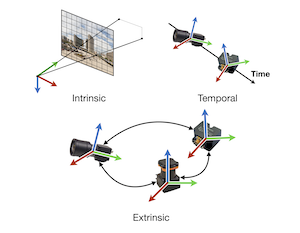

We study intrinsic, extrinsic, and spatiotemporal calibration of multisensor and sensor-actuator systems.

Robot Self-Calibration for Power-On-and-Go Operation

We are interested in building robots that are able to ‘power-on-and-go’, operating effectively out-of-the-box from day one. Multisensor systems offer a variety of compelling advantages for power-on-and-go systems, including improved task accuracy and robustness. However, correct data fusion requires precise calibration of all of the sensors (and potentially the actuators) involved, and calibration is typically a time-consuming and difficult process. Our lab has a long history of developing intrinsic and extrinsic spatiotemporal self-calibration algorithms for various sensor and actuator combinations (e.g., lidar-IMU, lidar-camera, camera-IMU, camera-manipulator, and 2D and 3D mm-Wave radar-camera). We have recently begun exploring improvements to self-calibration through trajectory optimization, informed by both sensor noise levels and system dynamics; noise is unavoidable in the real world, but is often ignored when considering the problem of self-calibration. The aim of our work is ultimately to achieve fully automatic calibration of multisensor and sensor-actuator systems in arbitrary environments, removing the burden of manual calibration and enabling long-term operation.
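The temporal part of spatiotemporal calibration can be illustrated with a simple, commonly used initialization: cross-correlate a quantity that both sensors observe (for example, angular-rate magnitude from an IMU and from camera or lidar odometry, resampled to a common rate) and pick the lag with the highest correlation. This is a sketch under those assumptions, not the lab's self-calibration method; the function name is hypothetical and the result is only resolved to one sample.

```python
import math


def estimate_time_offset(a, b, max_lag):
    """Integer lag k (in samples) maximizing the Pearson correlation of a[t] with b[t + k].

    A positive k means that events appear k samples later in b than in a.
    """
    best_lag, best_score = 0, -math.inf
    for k in range(-max_lag, max_lag + 1):
        pairs = [(a[t], b[t + k]) for t in range(len(a)) if 0 <= t + k < len(b)]
        if len(pairs) < 2:
            continue
        xs = [x for x, _ in pairs]
        ys = [y for _, y in pairs]
        mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
        num = sum((x - mx) * (y - my) for x, y in pairs)
        den = math.sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))
        if den == 0.0:
            continue  # constant signal over this overlap: correlation undefined
        score = num / den
        if score > best_score:
            best_lag, best_score = k, score
    return best_lag
```

Multiplying the lag by the sample period gives the offset in seconds; sub-sample refinement and joint estimation with the spatial extrinsics are beyond this sketch.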

Past Research Projects

Our research work has followed several threads related to pervasive, persistent, and perceptive robots. The projects below are no longer currently active, although in many cases we continue to support related code repositories.


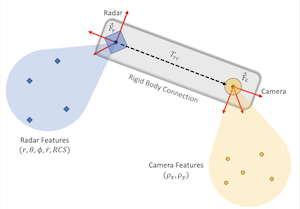

Radar sensors can provide range, bearing, range rate, and cross-section measurements.

Millimetre-Wave Radar for Navigation and Localization

Radar is a weather-robust sensing modality that is potentially valuable for many navigation and localization tasks. Millimetre-wave radar units are already deployed on many vehicles, and more advanced devices (able to provide 3D velocity information) will be available in the near term. We are integrating millimetre-wave radar with other sensors to provide an all-weather navigation and localization solution. An additional advantage of radar is the ability to estimate ego-velocity directly, without the need for data association. Fusion of radar data with other sensor information requires accurate calibration; current radar calibration algorithms require specialized targets and generally restrict the calibration process to a laboratory environment. Instead, we are exploring targetless radar-based extrinsic calibration, enabling end users to perform their own calibration.
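Direct ego-velocity estimation works because every static target's Doppler measurement constrains the sensor's own velocity independently. A minimal 2D sketch, assuming the radar reports range rate as positive for receding targets, uses the model range_rate_i = -(vx cos θ_i + vy sin θ_i) for a target at bearing θ_i and solves for (vx, vy) by linear least squares. The function name and sign convention are assumptions for illustration.

```python
import math


def radar_ego_velocity(bearings, range_rates):
    """Least-squares 2D sensor velocity (vx, vy) from Doppler returns of static targets.

    Assumed model (range rate > 0 for receding targets):
        range_rate_i = -(vx * cos(bearing_i) + vy * sin(bearing_i))
    Each return is used on its own, so no matching between scans is needed.
    """
    s_cc = s_cs = s_ss = 0.0
    r_c = r_s = 0.0
    for theta, rate in zip(bearings, range_rates):
        c, s = math.cos(theta), math.sin(theta)
        s_cc += c * c
        s_cs += c * s
        s_ss += s * s
        r_c -= rate * c  # right-hand side of the normal equations
        r_s -= rate * s
    det = s_cc * s_ss - s_cs * s_cs
    if abs(det) < 1e-12:
        raise ValueError("bearings are too similar to observe both velocity components")
    vx = (s_ss * r_c - s_cs * r_s) / det
    vy = (s_cc * r_s - s_cs * r_c) / det
    return vx, vy
```

Returns from moving objects violate the static-target model, so practical systems typically wrap a solver like this in an outlier-rejection scheme such as RANSAC.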

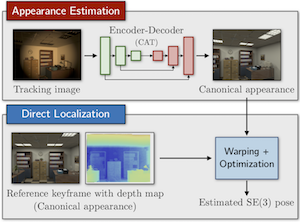

A learned Canonical Appearance Transformation improves visual localization under illumination change.

Appearance Modelling for Long-term Visual Localization

Environmental appearance change presents a significant impediment to long-term visual localization, whether due to illumination variation over the course of a day, changes in weather conditions, or seasonal appearance variations. We are exploring the use of deep learning to help solve the difficult problem of establishing appearance-robust geometric correspondences, while still retaining the accuracy and generality of classical model-based localization algorithms. One approach is to learn a Canonical Appearance Transformation (CAT) that transforms images to correspond to a consistent canonical appearance, such as a previously-seen reference appearance. The transformed images can then be used in combination with existing localization techniques. We have investigated several formulations of this problem, including bespoke nonlinear mappings for feature matching under appearance change and mappings based on colour constancy theory, with the goal of evaluating their usefulness for long-term autonomy applications.
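As a deliberately simple example of what mapping an image toward a canonical appearance can mean, the classic gray-world colour-constancy assumption treats the average scene colour as neutral and rescales each channel accordingly. This is a stand-in for illustration only: it is not the learned CAT or the lab's colour-constancy mapping, and it removes only a global colour cast, not shadows or seasonal change.

```python
def gray_world(pixels):
    """Gray-world colour constancy on a list of (r, g, b) float pixels.

    Assumes the average scene colour is achromatic, so each channel is scaled
    until its mean equals the mean over all three channels.
    """
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    gray = sum(means) / 3.0
    gains = [gray / m if m > 0 else 1.0 for m in means]  # leave empty channels unchanged
    return [tuple(p[ch] * gains[ch] for ch in range(3)) for p in pixels]
```

Applied to an image of a neutral-gray scene under a tinted illuminant, the per-channel gains cancel the tint, so each output pixel is again gray.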

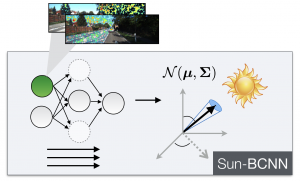

Sun-BCNN uses deep learning to infer the direction of the sun in monocular images.

Visual Sun Sensing

Observing the direction of the sun can help mobile robots orient themselves in unknown environments; when the time of day is known, the sun can be used as an absolute bearing reference to substantially reduce heading drift for robust visual navigation. Although it is possible to deploy hardware sun sensors, we have instead developed machine learning techniques that are able to infer the direction of the sun in the sky from a single RGB image. With such an approach, any platform that already uses RGB images for visual localization (e.g., for visual odometry) can extract global orientation information without any additional hardware. Towards this end, we have published three papers on classical and modern deep-learning-based methods that can reliably determine the direction of the sun and reduce dead-reckoning error during visual localization.
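The way a sun observation bounds heading drift can be sketched in a few lines. Assuming the sun's world-frame azimuth is available from an ephemeris (time and location known) and the image gives the sun's bearing in the vehicle frame, both measured in the same rotational sense, their difference is the vehicle heading, which can then correct a dead-reckoned heading with a scalar Kalman-style update. Function names and variances are hypothetical; this is not the Sun-BCNN pipeline.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def heading_from_sun(sun_azimuth_world, sun_bearing_body):
    """Vehicle heading implied by one sun observation (angles in radians, same sense)."""
    return wrap_angle(sun_azimuth_world - sun_bearing_body)


def fuse_heading(dr_heading, dr_var, sun_heading, sun_var):
    """Scalar Kalman-style update of a dead-reckoned heading with a sun-derived heading.

    Variances are in rad^2; returns (fused_heading, fused_variance).
    """
    gain = dr_var / (dr_var + sun_var)
    innovation = wrap_angle(sun_heading - dr_heading)  # shortest angular difference
    return wrap_angle(dr_heading + gain * innovation), (1.0 - gain) * dr_var
```

Because the sun heading is an absolute reference, repeated updates keep the heading variance bounded instead of letting it grow without limit as in pure dead reckoning.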

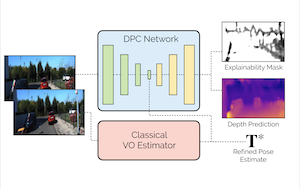

The DPC-Network framework enables corrections to be applied to classical visual motion estimation pipelines. Our self-supervised approach allows for training without ground truth pose information.

Deep Pose Correction for Visual Localization

We are working on ways to fuse the representational power of deep networks with classical model-based probabilistic localization algorithms. In contrast to methods that completely replace a classical visual estimator with a deep network, we propose an approach that uses deep neural networks to learn difficult-to-model corrections to the estimator from ground-truth training data. We name this type of network Deep Pose Correction (DPC-Net) and train it to predict corrections for a particular estimator, sensor and environment. To facilitate this training, we are exploring novel loss functions for learning SE(3) corrections through matrix Lie groups and different network structures for probabilistic regression on constrained surfaces. When ground truth is not available, we employ a self-supervised photometric reconstruction loss that requires only a video stream to facilitate network training. The self-supervised formulation allows the network to be fine-tuned for specific environments.
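The idea of learning a correction, rather than the whole pose, can be sketched for rotations alone. Given an estimated rotation R_est and ground truth R_gt, a natural regression target is the axis-angle vector phi = log(R_gt R_est^T), and applying a predicted correction means R_corrected = exp(phi) R_est. This rotation-only, pure-Python sketch is an illustration under stated assumptions: DPC-Net itself works with SE(3) corrections and probabilistic losses, and applying the correction on the left is our choice here.

```python
import math


def hat(p):
    """Skew-symmetric matrix of a 3-vector."""
    x, y, z = p
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A):
    return [[A[j][i] for j in range(3)] for i in range(3)]


def so3_exp(phi):
    """Rodrigues' formula: axis-angle vector -> rotation matrix."""
    theta = math.sqrt(sum(v * v for v in phi))
    K = hat(phi)
    K2 = matmul(K, K)
    if theta < 1e-12:
        a, b = 1.0, 0.5  # small-angle limits of sin(t)/t and (1 - cos(t))/t^2
    else:
        a, b = math.sin(theta) / theta, (1.0 - math.cos(theta)) / theta ** 2
    return [[(1.0 if i == j else 0.0) + a * K[i][j] + b * K2[i][j] for j in range(3)]
            for i in range(3)]


def so3_log(R):
    """Rotation matrix -> axis-angle vector (numerically unreliable near theta = pi)."""
    cos_t = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    theta = math.acos(cos_t)
    v = [R[2][1] - R[1][2], R[0][2] - R[2][0], R[1][0] - R[0][1]]
    if theta < 1e-12:
        return [0.5 * x for x in v]
    return [theta / (2.0 * math.sin(theta)) * x for x in v]


def correction_target(R_gt, R_est):
    """Axis-angle correction that maps the estimate onto ground truth."""
    return so3_log(matmul(R_gt, transpose(R_est)))


def apply_correction(phi, R_est):
    """Left-multiply the estimate by the exponentiated correction."""
    return matmul(so3_exp(phi), R_est)
```

Working with the axis-angle vector keeps the network output unconstrained while guaranteeing that the corrected estimate is still a valid rotation.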

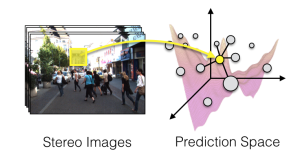

PROBE and PROBE-GK map from features to a prediction space.

Machine Learning for Predictive Noise Modelling

Many robotic algorithms for tasks such as perception and mapping treat all measurements as being equally informative. This is typically done for reasons of convenience or ignorance – it can be very difficult to model noise sources and sensor degradation, both of which may depend on the environment in which the robot is deployed. In contrast, we are developing a suite of techniques (under the moniker PROBE, for Predictive RObust Estimation) that intelligently weight observations based on a predicted measure of informativeness. We learn a model of information content from a training dataset collected within a prototypical environment and under relevant operating conditions; the learning algorithm can make use of ground truth or rely on expectation maximization. The result is a principled method for taking advantage of all relevant sensor data.
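The general idea of weighting observations by predicted informativeness can be shown with a toy example: learn, from ground-truth residuals, how noisy measurements with a given feature value tend to be, then combine new measurements by inverse-variance weighting. The scalar feature, the k-nearest-neighbour predictor, and the function names are hypothetical; this is not PROBE's model, only the weighting principle it builds on.

```python
def predict_variance(feature, training, k=3, floor=1e-6):
    """Hypothetical k-NN predictor of measurement variance.

    `training` holds (feature, squared_residual) pairs, where each residual was
    computed against ground truth for a measurement with that feature value.
    """
    nearest = sorted(training, key=lambda fr: abs(fr[0] - feature))[:k]
    return max(floor, sum(r for _, r in nearest) / len(nearest))


def weighted_estimate(measurements, training, k=3):
    """Inverse-variance weighted mean of scalar (value, feature) measurements."""
    weights = [1.0 / predict_variance(f, training, k) for _, f in measurements]
    return sum(w * z for w, (z, _) in zip(weights, measurements)) / sum(weights)
```

A measurement whose feature resembles historically noisy conditions receives a small weight, so the combined estimate stays close to the measurements predicted to be reliable.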

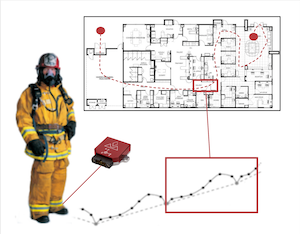

Foot-mounted IMUs can enable accurate localization of first responders in emergency scenarios.

Infrastructure-Free Human Localization

Reliable human localization is a crucial component of a number of applications, such as patient monitoring, first-responder localization, and human-robot collaboration. Since GPS is generally available only outdoors, indoor localization requires alternative tracking methods. One alternative is to use low-cost, lightweight inertial measurement units (IMUs), which monitor a user’s movement by measuring linear acceleration and angular velocity over time. An inertial navigation system (INS) integrates these measurements to produce a position estimate. Because integration accumulates sensor errors, a traditional INS built on low-cost IMUs drifts rapidly; however, error growth can be significantly reduced by mounting the IMU on the user’s foot, which allows zero-velocity pseudo-measurements to be incorporated into the state estimate whenever the foot is stationary on the ground. Our research aims to enhance the accuracy of these systems by using machine learning to improve zero-velocity detection during very challenging activities such as running, crawling, and stair climbing.
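For context, a classical zero-velocity detector is often a hand-tuned threshold test: the foot is declared stationary when, over a short window, the specific-force magnitude stays close to gravity and the angular rate stays small. The sketch below is that kind of baseline (the type of detector that learned approaches aim to improve on); the window size, thresholds, gravity value, and function name are hypothetical. When a sample is flagged, an estimator would apply a zero-velocity pseudo-measurement.

```python
import math

G = 9.81  # assumed local gravity magnitude, m/s^2


def zero_velocity_flags(accel, gyro, window=5, acc_tol=0.3, gyro_tol=0.2):
    """Flag samples where the foot is likely stationary.

    accel: list of (x, y, z) specific-force samples in m/s^2.
    gyro: list of (x, y, z) angular-rate samples in rad/s.
    A sample is flagged only if every sample in the centred window passes both tests.
    """
    n = len(accel)
    acc_norm = [math.sqrt(sum(a * a for a in s)) for s in accel]
    gyro_norm = [math.sqrt(sum(w * w for w in s)) for s in gyro]
    half = window // 2
    flags = []
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        acc_ok = all(abs(acc_norm[j] - G) < acc_tol for j in range(lo, hi))
        gyro_ok = all(gyro_norm[j] < gyro_tol for j in range(lo, hi))
        flags.append(acc_ok and gyro_ok)
    return flags
```

Fixed thresholds like these tend to work for walking but break down for running or crawling, where stance phases are short and motion is less regular.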

Autonomous power wheelchair navigating and mapping in an indoor environment.

Low-Cost Navigation Systems for Near-Term Assistive Applications

Simultaneous localization and mapping (SLAM) has been intensively studied by the robotics community for more than 20 years, and yet there are few commercially deployed SLAM systems operating in real-world environments. We are interested in deploying low-cost, robust SLAM solutions for near-term consumer and industry applications; there are numerous challenges involved in building reliable systems under severe cost constraints. Our initial focus is on assistive devices for wheelchair navigation, with the goal of dramatically improving the mobility of users with, e.g., spinal cord injuries. There are significant opportunities to positively affect the lives of thousands of individuals, while at the same time creating advanced robotic technologies. We have demonstrated that existing power wheelchairs, retrofitted with low-cost navigation hardware (i.e., two orders of magnitude cheaper than the closest comparable solutions), are able to reliably operate in highly dynamic human environments.