Vincenzo Polizzi

Ph.D. Student

I imagine a future in which autonomous systems operate reliably in complex, ever-changing environments—unfazed by drastic variations in viewpoint, illumination, or appearance. My research sits at the intersection of computer vision and robotics, where I focus on combining deep learning with 3D scene modeling to develop adaptive and efficient representations for localization and mapping.

So far, I’ve had the chance to conduct research in several world-class laboratories. These include the Robotics and Perception Group at the University of Zurich with Prof. Davide Scaramuzza, the Vision for Robotics Lab at ETH Zurich with Prof. Margarita Chli, and the Computational Imaging Group at the University of Toronto with Prof. David B. Lindell. I also spent time as a Visiting Research Student in the Aerial Mobility Group at NASA JPL, working on the first collaborative thermal-inertial odometry system.

I completed my Bachelor’s degree in Automation Engineering at the Politecnico di Milano and earned my M.Sc. in Robotics, Systems, and Control from ETH Zurich. I am now pursuing my Ph.D. in the STARS Laboratory at the University of Toronto Institute for Aerospace Studies (UTIAS), under the supervision of Prof. Jonathan Kelly.

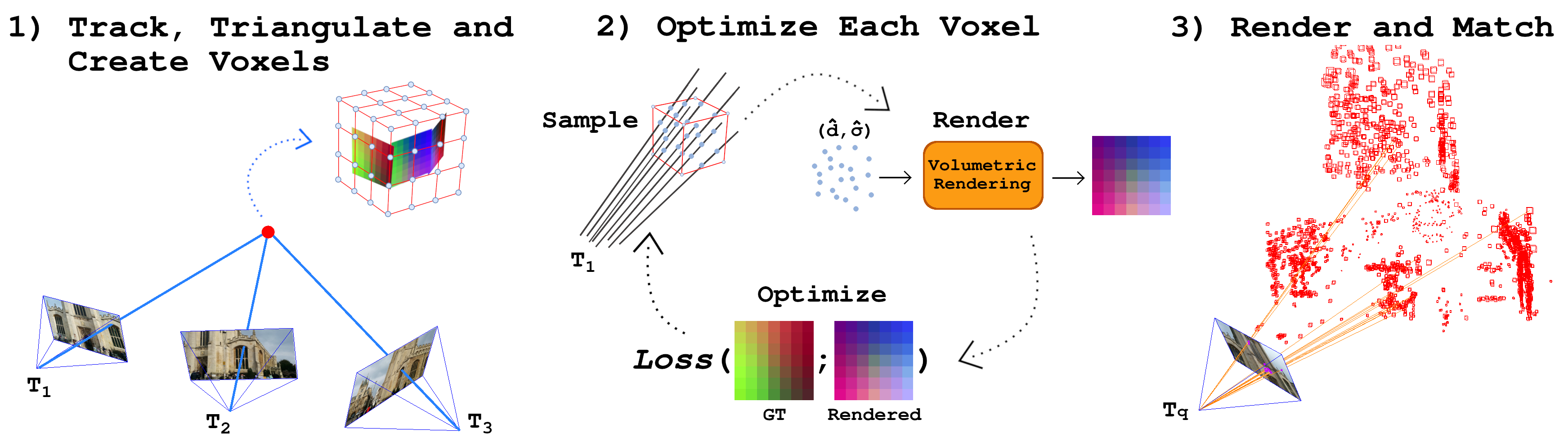

FaVoR: Features via Voxel Rendering for Camera Relocalization

Vincenzo Polizzi, Marco Cannici, Davide Scaramuzza, Jonathan Kelly

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2025 (Oral)

Abstract: Camera relocalization methods often struggle with significant viewpoint and appearance changes. We propose FaVoR, a novel approach that leverages a globally sparse but locally dense 3D voxel representation of 2D features. By rendering descriptors from these voxels, we enable robust feature matching from novel views, significantly outperforming state-of-the-art methods in challenging indoor environments.

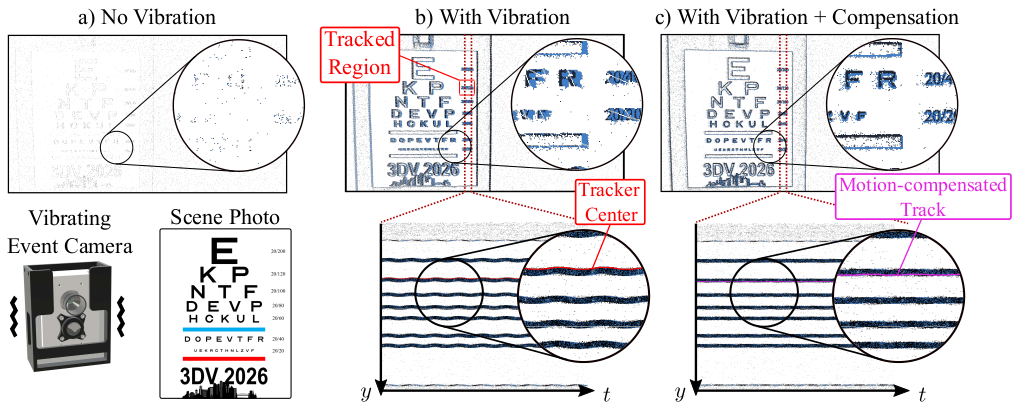

VibES: Induced Vibration for Persistent Event-based Sensing

Vincenzo Polizzi, Stephen Yang, Quentin Clark, Jonathan Kelly, Igor Gilitschenski, David B. Lindell

International Conference on 3D Vision (3DV), 2026

Abstract: Static scenes pose a challenge for event cameras, which generate data only when scene brightness changes, typically as a result of motion. We propose a lightweight solution that uses induced vibration from a rotating unbalanced mass to sustain event generation. Our pipeline then compensates for this induced motion to produce clean events. Experiments show that our approach improves image reconstruction and edge detection in static scenarios.